In recent years, neural networks have become a cornerstone of artificial intelligence (AI) and machine learning (ML). From powering speech recognition in your smartphone to enabling autonomous vehicles, neural networks are transforming the way machines understand and interact with the world.

What Are Neural Networks?

Neural networks are computational models inspired by the human brain's structure and functioning. They consist of interconnected nodes called neurons or artificial neurons, which process data and pass information to one another. This interconnected web allows neural networks to learn complex patterns and make predictions or decisions.

The Basic Structure of a Neural Network

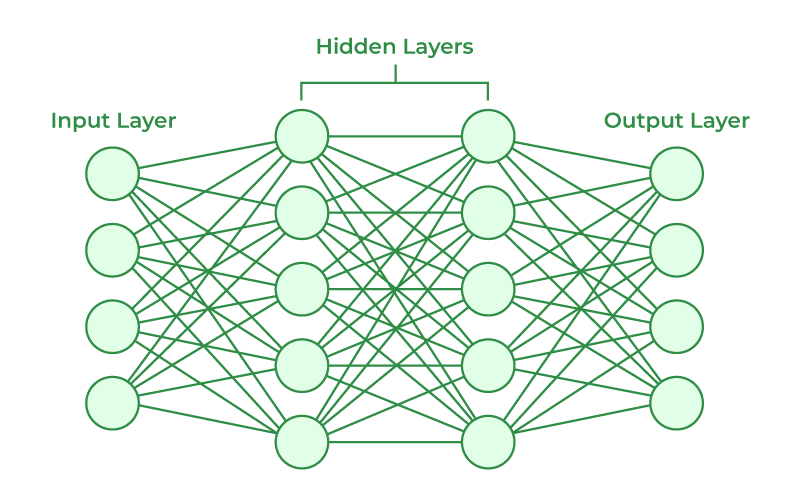

A typical neural network is composed of three main types of layers:

- Input Layer: Receives the raw data (e.g., images, text, sensor readings).

- Hidden Layers: Perform complex computations and feature transformations. Deep neural networks have multiple hidden layers.

- Output Layer: Produces the final prediction or classification.

Each connection between neurons carries a weight that determines how strongly one neuron's output influences the next. During training, these weights are adjusted to improve the network's performance.
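
To make the layer structure concrete, here is a minimal sketch of data flowing from an input layer through one hidden layer to an output layer. It assumes a sigmoid activation and a per-neuron bias term, neither of which is specified above, and every weight value is hand-picked purely for illustration.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases):
    """Compute one layer: each neuron takes a weighted sum of its inputs,
    adds its bias, and applies the sigmoid activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        weighted_sum = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(weighted_sum))
    return outputs

# Hypothetical values, chosen only for this example.
inputs = [0.5, -1.0]                         # input layer: two features
hidden_weights = [[0.8, -0.2], [0.4, 0.9]]   # two hidden neurons, two weights each
hidden_biases = [0.0, 0.1]
output_weights = [[1.5, -1.1]]               # one output neuron, two weights
output_biases = [0.2]

hidden = dense_layer(inputs, hidden_weights, hidden_biases)
prediction = dense_layer(hidden, output_weights, output_biases)
print(prediction)  # a single value between 0 and 1
```

Changing any weight changes the prediction, which is exactly what training exploits.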

How Do Neural Networks Learn?

Neural networks learn through a process called training, which involves feeding data into the network and adjusting weights to minimize errors. This process typically uses an algorithm called backpropagation combined with an optimization method like gradient descent.

Here's a simplified overview:

- Forward Pass: Data moves through the network, generating a prediction.

- Error Calculation: The network compares its prediction with the actual label (e.g., image label).

- Backward Pass: The network propagates the error backward from the output layer to earlier layers, working out how much each weight contributed to it.

- Update Weights: Using the error gradient, the weights are updated iteratively until the network performs well on the training data.
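
The four steps above can be sketched with the smallest possible "network": a single linear neuron trained by gradient descent. This is a simplified illustration, not a full backpropagation implementation; with only one neuron, the backward pass reduces to one gradient calculation. The toy data, the mean squared error loss, and the learning rate are all assumptions made for the example.

```python
# Toy data following y = 2x + 1 (made up for this sketch).
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

weight, bias = 0.0, 0.0   # start from an uninformed guess
learning_rate = 0.05      # step size for gradient descent (assumed value)

for epoch in range(2000):
    grad_w, grad_b = 0.0, 0.0
    for x, target in data:
        prediction = weight * x + bias   # 1. forward pass
        error = prediction - target      # 2. error calculation
        # 3. backward pass: gradient of the mean squared error
        #    with respect to the weight and the bias
        grad_w += 2 * error * x / len(data)
        grad_b += 2 * error / len(data)
    # 4. update weights: step against the gradient
    weight -= learning_rate * grad_w
    bias -= learning_rate * grad_b

print(round(weight, 3), round(bias, 3))  # close to 2 and 1
```

In a multi-layer network, step 3 applies the chain rule layer by layer, which is what backpropagation refers to; deep learning libraries typically automate that part.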

Types of Neural Networks

Neural networks come in various architectures, each suited for different tasks:

- Feedforward Neural Networks: The simplest form; data moves forward only.

- Convolutional Neural Networks (CNNs): Excel at image recognition and processing spatial data.

- Recurrent Neural Networks (RNNs): Designed for sequential data like text, speech, or time series.

- Transformers: Attention-based models that are widely used for natural language processing; GPT is a well-known example.
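
As a rough illustration of what sets two of these architectures apart, the sketch below shows the core operation behind a convolutional layer (one small set of weights reused at every position) and behind a recurrent layer (a state carried from one step to the next). Both functions are deliberately stripped down, scalar-valued, and hypothetical; real layers use many kernels, vectors of hidden units, and learned weights. Transformers are not covered here.

```python
import math

def conv1d(signal, kernel):
    """Slide one small kernel across the signal (no padding, stride 1).
    The same weights are reused at every position, as in a CNN layer.
    Like most deep learning code, this does not flip the kernel."""
    width = len(kernel)
    return [
        sum(k * s for k, s in zip(kernel, signal[i:i + width]))
        for i in range(len(signal) - width + 1)
    ]

def rnn_step(hidden, x, w_hidden, w_input):
    """One recurrent update: the new state depends on the current input
    and on the previous state, which summarizes the earlier inputs."""
    return math.tanh(w_hidden * hidden + w_input * x)

# Hypothetical input sequence and weights, for illustration only.
signal = [1.0, 2.0, 4.0, 7.0, 11.0]

# A two-element kernel that responds to the change between neighbours.
edges = conv1d(signal, [-1.0, 1.0])
print(edges)  # [1.0, 2.0, 3.0, 4.0]

# Process the same sequence one element at a time, carrying a state along.
hidden = 0.0
for x in signal:
    hidden = rnn_step(hidden, x, w_hidden=0.5, w_input=0.1)
print(hidden)  # final state, a value between -1 and 1
```

The convolution treats every position the same way, which suits spatial patterns that can appear anywhere; the recurrent step is order-sensitive, which suits sequences.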

Applications of Neural Networks

Neural networks are at the heart of many modern AI applications:

- Image and Video Recognition: Face recognition, medical image analysis.

- Natural Language Processing: Language translation, chatbots, sentiment analysis.

- Speech Recognition: Voice assistants, transcription services.

- Autonomous Vehicles: Real-time object detection and decision-making.

- Recommender Systems: Personalized content and product suggestions.

Challenges and Future of Neural Networks

Despite their impressive capabilities, neural networks face challenges such as:

- Data Requirements: Training usually requires large datasets, which are often labeled.

- Computational Power: Training deep networks requires significant resources.

- Interpretability: Understanding how neural networks arrive at decisions remains difficult.

Research continues into making neural networks more efficient, transparent, and accessible. Innovations like transfer learning, unsupervised learning, and explainable AI are paving the way for broader applications.

Conclusion

Neural networks have revolutionized AI by enabling machines to learn from examples, much as humans do, though by different mechanisms. As technology advances, neural networks will become even more integrated into our daily lives, powering smarter, more intuitive systems.

Whether you're a developer, researcher, or enthusiast, understanding neural networks is key to unlocking the future of intelligent technology.